Recently, customers began complaining that their vehicles with driver-assistance technologies were "phantom braking," slamming on the brakes with no visible obstacle present. Researchers at Michigan State University set out to learn more about the phenomenon: why it happens and how to stop it.

“Frequent phantom braking incidents can erode confidence in autonomous driving technologies,” said Qiben Yan, an assistant professor in the College of Engineering. “If riders perceive the technology as unpredictable or unreliable, they’ll be less likely to embrace it.”

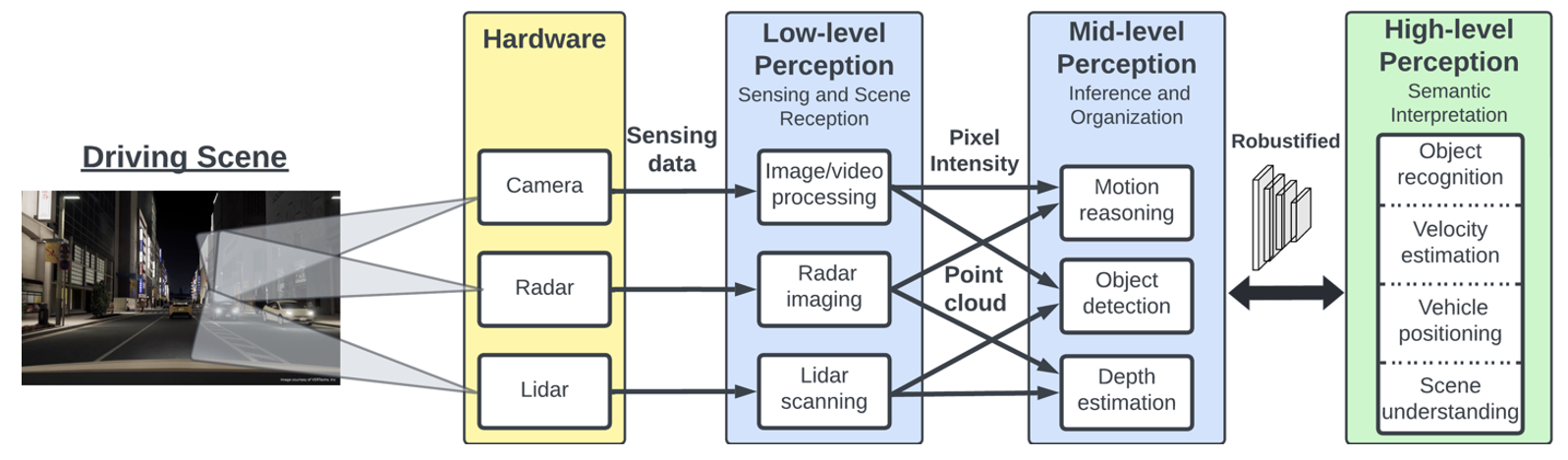

Autonomous vehicles rely on a vision system made up of multiple cameras and radar, which uses radio waves, to gather the information the car needs to navigate the world around it. In previous research, Yan and his team showed that the cameras in these vision systems can be deceived by hackers.

“We projected lights into the cameras of the vehicle, and the camera recognized this as a false object and hit the brakes; it is surprising how fake things can be created out of nowhere,” said Yan. “We were also able to make an object in front of the car disappear to the camera so that the vehicle couldn’t see an obstacle, and the vehicle hit the object.”

A new National Science Foundation (NSF) grant, shared with Associate Professor Sijia Liu and MSU Research Foundation Professor Xiaoming Liu of MSU and carried out in collaboration with Virginia Tech, will help the researchers expand this work. They will study how cameras perceive these phantom attacks and identify ways to make vision systems more secure and resilient against malicious attacks.

Yan and his team are borrowing ideas from neuroscience research on how optical illusions trick the human eyes and brain.

“We borrowed some ideas from human perception studies to understand the AI systems behind the vision system,” said Yan. “We know that vision systems can be deceived, and we want to program the AI model to see the environment and interpret the information more accurately.”

Perception happens at three levels. At the low level, the vision system sees the scene in front of it. At the mid level, it recognizes what an object is and determines how far away it is. At the high level, it recognizes how fast an object is moving and how that object fits into the overall scene.

“We try to understand the evolution of perception from one level to the next,” said Yan. “Next, we want to develop a defense that ensures inherent security and to enhance the AI model that powers it.”

This research will help make autonomous vehicles smarter and safer in the future.

“Addressing the phantom braking issue is not just about refining the vision technologies, it’s about the overall viability, safety and success of autonomous vehicles in the future,” said Yan.