A new level of synergy

Underlying this assertion is the fact that roughly 90% of fatal roadway collisions are the result of human error, Radha says.

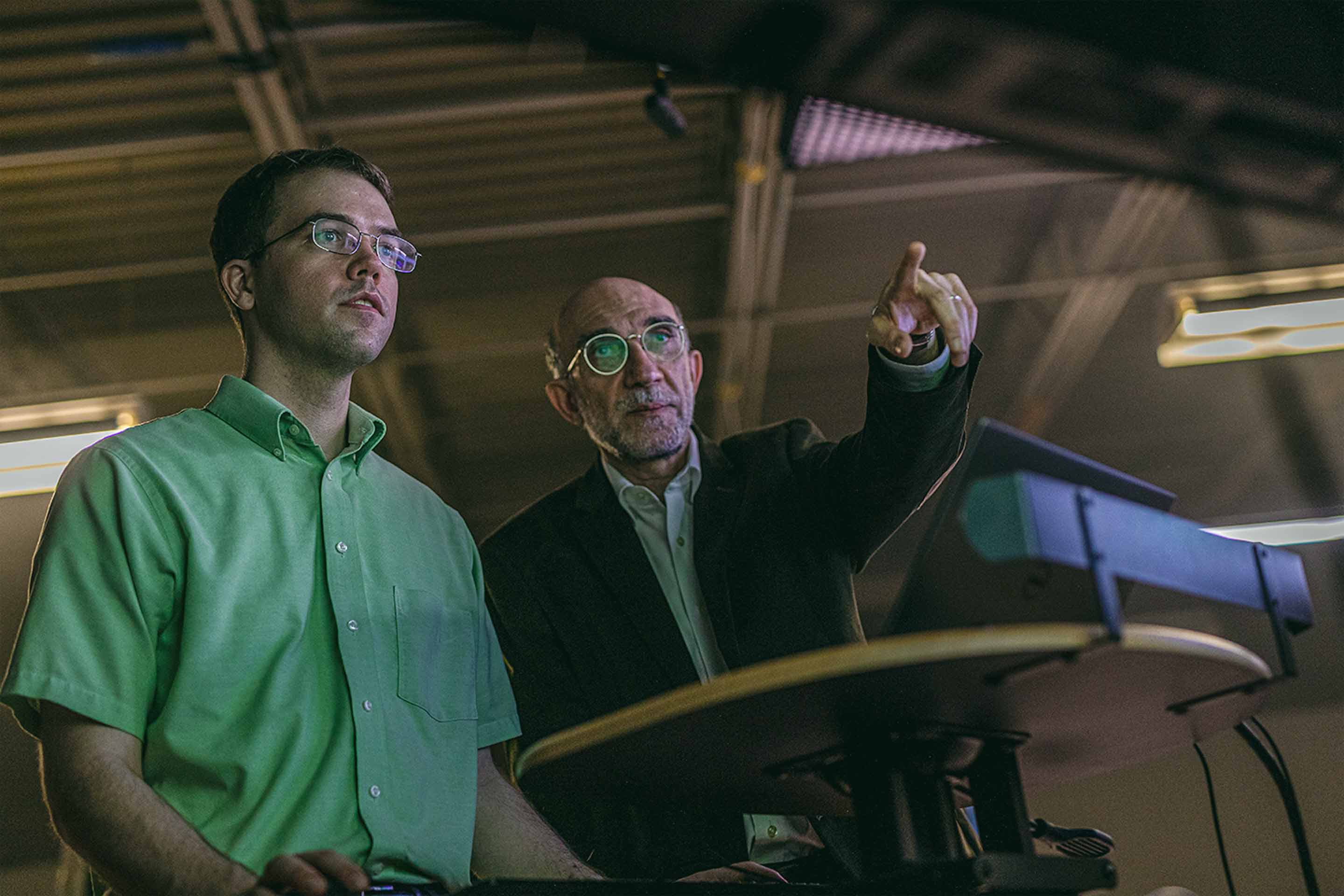

MSU Foundation Professor Hayder Radha (right) and doctoral student Daniel Kent (left) work in the Connected and Autonomous Networked Vehicles for Active Safety, or CANVAS, lab. Credit: Michigan State University

The promise of autonomous vehicles is that they will prevent those errors by taking human decision-making out of the equation. The rub is that removing that component would also remove something humans do really well: sensing and reacting to their environments.

Yet technology can make up for that, as evidenced by the role autonomy has in our lives today.

For people in California or the southwestern U.S., driverless vehicles might already be serving as taxis and delivery trucks. More globally, though, autonomous features that adjust cruise control speeds, keep drivers from drifting out of their lanes and monitor blind spots to prevent collisions are becoming more commonplace.

Increasingly affordable sensors and sophisticated software have enabled researchers to replicate what humans do. In some cases, these systems can outperform humans.

“Humans can really only focus on one thing at a time,” says Daniel Morris, a colleague of Radha’s in CANVAS and an associate professor in the Department of Electrical and Computer Engineering. He also has a joint appointment in Biosystems and Agricultural Engineering.

“An autonomous vehicle will have sensors looking in all directions at all times. And they can’t get distracted. That right there has a great potential for safety,” Morris says. “We can also combine more modalities to improve the sensing. People aren’t so good at seeing through fog, rain and snow. But we have radar, thermal infrared cameras and some lidar that can do those things.”

MSU Associate Professor Daniel Morris presents at a 2019 event in the Connected and Autonomous Networked Vehicles for Active Safety, or CANVAS, lab space. Credit: Courtesy of MSU College of Engineering

No one sensor is perfect on its own, and one of the strengths of the CANVAS team is in what’s known as multimodal sensor fusion. That is, CANVAS researchers are developing algorithms that let autonomous vehicles fuse data captured by different devices better and more seamlessly.

For example, video cameras take images of a 3D world and condense them into two dimensions. But autonomous vehicles need to account for all three.

Light detection and ranging, or lidar, technology can determine how far away objects are by shining laser light on them and detecting how long it takes that light to return. Combining cameras with lidar thus gives a vehicle vision with depth perception.
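The time-of-flight idea behind lidar can be illustrated with a short calculation. This is a minimal sketch, not the CANVAS team's code: the function name `lidar_range_m` is hypothetical, and it assumes the light travels at its vacuum speed and that the pulse goes out and back along the same path, so the one-way distance is half the round trip.

```python
# Speed of light in a vacuum, in meters per second (exact by definition).
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def lidar_range_m(round_trip_time_s: float) -> float:
    """Estimate distance to an object from a laser pulse's round-trip time.

    Hypothetical helper for illustration. The pulse travels to the object
    and back, so the one-way distance is half of (speed * time).
    """
    if round_trip_time_s < 0:
        raise ValueError("round-trip time cannot be negative")
    return SPEED_OF_LIGHT_M_PER_S * round_trip_time_s / 2.0


# A pulse that returns after 200 nanoseconds hit something about 30 m away.
print(round(lidar_range_m(200e-9), 2))
```

Pairing a distance like this with the matching pixel in a camera image is, in spirit, what gives the two-dimensional picture its missing third dimension.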

Another example that Morris’s team is currently working on combines radar and video.

“Radar is already used in some cars right now for collision avoidance. By measuring distance to objects as well as incoming velocity, a radar-based system can predict an impending collision and automatically activate brakes,” Morris says.
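The prediction Morris describes can be sketched as a time-to-collision check: distance divided by closing speed. The code below is an illustration under simple assumptions, not how any production system works. The function names and the 2-second braking threshold are hypothetical, and the closing speed is assumed to stay constant.

```python
import math


def time_to_collision_s(distance_m: float, closing_speed_m_per_s: float) -> float:
    """Seconds until impact if the closing speed stays constant.

    closing_speed_m_per_s is positive when the gap is shrinking.
    Returns infinity when the object is not getting closer.
    """
    if closing_speed_m_per_s <= 0:
        return math.inf
    return distance_m / closing_speed_m_per_s


def should_brake(distance_m: float, closing_speed_m_per_s: float,
                 threshold_s: float = 2.0) -> bool:
    """Trigger braking when the predicted impact is sooner than the threshold.

    The 2-second default is an assumed value chosen for illustration.
    """
    return time_to_collision_s(distance_m, closing_speed_m_per_s) < threshold_s


print(should_brake(20.0, 15.0))   # gap closes in about 1.3 s
print(should_brake(60.0, 15.0))   # gap closes in 4 s
```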

So, radar is incredibly useful, but it’s not equally useful in all directions. It measures how fast an object is moving toward or away from the sensor, not how fast it is moving across the sensor’s field of view. Imagine a car traveling into a four-way intersection from the south with a radar attached to its front like a hood ornament. The radar is going to detect and predict the motion of a vehicle coming from the north better than that of a vehicle traveling east to west.
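The intersection example can be put into numbers. A typical automotive radar senses speed along the line between itself and the target, often called radial speed. The sketch below is an illustration only: `radial_speed` is a hypothetical helper, the radar sits at the origin facing north (the +y direction), and velocities are taken relative to the radar.

```python
import math


def radial_speed(position, velocity):
    """Component of a target's velocity along the line from radar to target.

    position and velocity are (x, y) tuples in meters and meters per second,
    relative to a radar at the origin. Negative values mean the target is
    approaching; positive values mean it is moving away.
    """
    px, py = position
    vx, vy = velocity
    rng = math.hypot(px, py)
    if rng == 0:
        raise ValueError("target cannot be at the radar's own position")
    return (vx * px + vy * py) / rng


# Oncoming car 30 m to the north, heading south at 15 m/s.
print(radial_speed((0.0, 30.0), (0.0, -15.0)))

# Car 30 m straight ahead, crossing east to west at 15 m/s.
print(radial_speed((0.0, 30.0), (-15.0, 0.0)))
```

The oncoming car shows its full 15 m/s to the radar, while the crossing car directly ahead shows none of it, even though both are moving at the same speed.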

“Radar has a weakness with cross traffic,” Morris says. “The question became could we upgrade radar for cross traffic.”

By combining radar information with video data, Morris and his team showed it’s possible to capture the full velocity profile — front to back, side to side — of other vehicles on the road.
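One way to picture how video could supply the missing sideways motion is sketched below. This is not the team's algorithm, which the article does not describe. It is a simplified geometric illustration that assumes the radar provides range and radial speed, and that tracking the object across video frames provides its bearing and how fast that bearing is changing. The function name and all parameters are hypothetical.

```python
import math


def full_velocity(range_m, bearing_rad, radial_speed_m_per_s, bearing_rate_rad_per_s):
    """Combine a radial speed and a bearing rate into an (vx, vy) velocity.

    bearing_rad is measured counterclockwise from the +x (east) axis.
    Assumed inputs: range and radial speed from radar, bearing and bearing
    rate from tracking the object in video.
    """
    # Unit vector pointing from the sensor to the target.
    rx, ry = math.cos(bearing_rad), math.sin(bearing_rad)
    # Unit vector perpendicular to it (direction of increasing bearing).
    tx, ty = -ry, rx
    # Sideways speed follows from how quickly the bearing changes at this range.
    tangential_speed = range_m * bearing_rate_rad_per_s
    vx = radial_speed_m_per_s * rx + tangential_speed * tx
    vy = radial_speed_m_per_s * ry + tangential_speed * ty
    return vx, vy


# Car 30 m due north, no radial motion, bearing sweeping at 0.5 rad/s.
vx, vy = full_velocity(30.0, math.pi / 2, 0.0, 0.5)
print(round(vx, 6), round(vy, 6))
```

In this example the radar alone would report a stationary object, while the combined estimate recovers a car crossing westward at 15 m/s.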

Both projects illustrate the advantages of being able to combine multiple sensor inputs, as well as the advantages of MSU’s researchers having connections with the automotive industry. Morris’s project with lidar was supported by Changan Automobile and his recent radar work was inspired by Ford Motor Company.

“I wasn’t thinking about radar and camera fusion until we talked to Ford. They had seen what we did with lidar and asked if we could do it for radar,” Morris says.

In fact, Ford has been supporting the work of CANVAS since the team was organized under Radha’s leadership about five years ago. And, further reflecting the interdisciplinary spirit of this field, it’s not just automotive companies that are getting behind the research.

For example, the Semiconductor Research Corporation — a consortium that includes companies such as Intel, Samsung and Texas Instruments — is also supporting the multimodal sensor fusion efforts of CANVAS.

“It’s been great working with partners in industry. They want the technology and they’re eager for results, and they help us with research directions based on what they’ll need a couple years down the line,” Morris says.