Detecting “deepfakes,” in which a person in an existing image or video is replaced with someone else’s likeness, presents a massive cybersecurity challenge: What could happen when deepfakes are created with malicious intent?

Artificial intelligence experts from Michigan State University and Facebook partnered on a new reverse-engineering research method to detect and attribute deepfakes. The method gives researchers and practitioners tools to better investigate incidents of coordinated disinformation that use deepfakes, and it opens new directions for future research.

Technological advancements make it nearly impossible to tell whether an image of a person that appears on social media platforms depicts a real human. The MSU-Facebook detection method is the first to go beyond standard-model classification methods.

“Our method will facilitate deepfake detection and tracing in real-world settings where the deepfake image itself is often the only information detectors have to work with,” said Xiaoming Liu, MSU Foundation Professor of computer science. “It’s important to go beyond current methods of image attribution because a deepfake could be created using a generative model that the current detector has not seen during its training.”

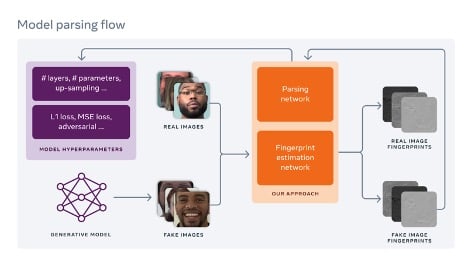

The team’s novel framework uses fingerprint estimation to predict network architecture and loss functions of an unknown generative model given a single generated image.

The new method, explained in the paper “Reverse Engineering of Generative Models: Inferring Model Hyperparameters from Generated Images,” was developed by Liu and MSU College of Engineering doctoral candidate Vishal Asnani, and Facebook AI researchers Xi Yin and Tal Hassner.

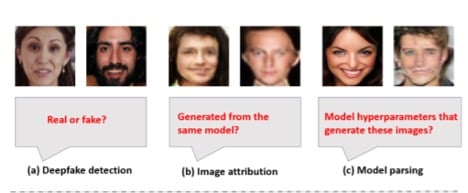

Solving the problem of proliferating deepfakes requires going beyond current methods, which focus on distinguishing a real image from a deepfake image generated by a model seen during training. The goal is to understand how to extend image attribution beyond the limited set of models present in training.

Reverse engineering, while not a new concept in machine learning, is a different way of approaching the problem of deepfakes. Prior work on reverse engineering relies on preexisting knowledge, which limits its effectiveness in real-world cases.

“Our reverse engineering method relies on uncovering the unique patterns behind the AI model used to generate a single deepfake image,” said Facebook’s Hassner.

“With model parsing, we can estimate properties of the generative models used to create each deepfake, and even associate multiple deepfakes to the model that possibly produced them. This provides information about each deepfake, even ones where no prior information existed.”

To test their new approach, the MSU researchers put together a fake image dataset with 100,000 synthetic images generated from 100 publicly available generative models. The research team mimicked real-world scenarios by performing cross-validation to train and evaluate the models on different splits of datasets.
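One way to picture this kind of evaluation is a split made by generative model rather than by image, so that every test image comes from a model the detector never saw in training. The Python sketch below is illustrative only and is not the researchers' code. It assumes the 100,000 images are spread evenly across the 100 models (1,000 each) and uses a hypothetical four-fold split; the article states neither detail, and names such as `make_folds` and `split_by_model` are invented for the example.

```python
import random

# Assumed layout (not stated in the article): images spread evenly across models.
N_MODELS = 100
IMAGES_PER_MODEL = 1000
N_FOLDS = 4  # hypothetical fold count, chosen only for illustration


def make_folds(model_ids, n_folds, seed=0):
    """Shuffle the model IDs and deal them into n_folds disjoint groups."""
    ids = list(model_ids)
    random.Random(seed).shuffle(ids)
    return [ids[i::n_folds] for i in range(n_folds)]


def split_by_model(images, held_out):
    """Send every image from a held-out model to the test set."""
    held = set(held_out)
    train = [img for img in images if img[1] not in held]
    test = [img for img in images if img[1] in held]
    return train, test


# Each image is represented as (image_id, model_id); no pixel data is needed here.
images = [
    (f"img_{m:03d}_{i:04d}", m)
    for m in range(N_MODELS)
    for i in range(IMAGES_PER_MODEL)
]

folds = make_folds(range(N_MODELS), N_FOLDS)
split_sizes = []
for k, held_out in enumerate(folds):
    train, test = split_by_model(images, held_out)
    # No generative model may contribute images to both sides of the split.
    assert {m for _, m in train}.isdisjoint(m for _, m in test)
    split_sizes.append((len(train), len(test)))
    print(f"fold {k}: {len(train)} train images, {len(test)} test images")
```

Grouping by model matters because a random split over images would place pictures from the same generator on both sides, which would not reflect the real-world case of an unseen generative model.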

A more in-depth explanation of their model can be seen on Facebook’s blog.

The results showed that the MSU-Facebook approach performs substantially better than the random baselines of previous detection models.

“The main idea is to estimate the fingerprint for each image and use it for model parsing,” Liu said. “Our framework can not only perform model parsing, but also extend to deepfake detection and image attribution.”

In addition to Facebook AI, this study by MSU is based upon work partially supported by the Defense Advanced Research Projects Agency under Agreement No. HR00112090131.